Distributed Computing

It took us four thousand years to get from the abacus to the first recognizable computers around World War II. The advanced mathematics required for missile defense and the paranoid atmosphere of the Cold War afterward provided the fertile ground necessary to accelerate this advancement. Within forty years, computers could share files between Hawaii and London in mere moments. This might not seem like much now, but the journey to this point was not straightforward.

The Beginning

Alan Turing first imagined how a ‘computing machine’ could store a program in memory in 1936. It was only theoretical, but he used the word ‘process’ 33 times to explain how the machine could maintain an observable ‘state of mind’ based on its input which could be used to determine its future behavior. At that point, a computer was still a job description for a human being. In 1937, Claude Shannon proved how circuits and relays could be used to represent the boolean algebra created by George Boole almost a century before.

Boolean Algebra showed how actual problems could be solved with just two values and a few simple operators, and Shannon showed how to use existing technology to harness this logic. Within six years, the U.S. military began work on the ENIAC to help calculate ballistics trajectories. While the ENIAC was programmable, it still used the decimal system. Programming was a matter of switches and wires, and it initially required every aspect of the program to be completely rewired each time. Its successor, the EDVAC, was one of the first computers to use binary to store programs in memory.

John von Neumann was a famous mathematician, and he consulted on both projects. He released a paper about his work on the EDVAC where he explained how one could connect an input to an output through stored memory with the use of an automatic computing system. This quickly became known as Von Neumann architecture, and it revolutionized computing. At that point, IBM dominated the industry, but it was not what we imagine today.

The term “super-computing” is sometimes said to have originated in reference to IBM’s accounting machines. The company was built on a new technology for storing and transferring information, the “punch card”. The Industrial Revolution both required and facilitated the processing of data, and IBM was one of the first companies to capitalize on this. They took electromechanical computing to its limits when they helped build the Harvard Mark I, but IBM could see the writing on the wall. Soon, their digital computing mainframes dominated the industry.

The Problem

In the beginning, computers were extremely expensive. These groundbreaking devices didn’t just cost millions of dollars in today’s money, but they also took up entire rooms and usually required multiple people to operate. Once they were generally available, it was very common for queues to form while programmers waited to use the computer. So people were given time limits, and these were often barely long enough to get their programs set up. There was no operating system, so every program had to be built from scratch.

Building on existing technology, programs were soon punched into paper cards at a desk separate from the computer. There’s not much data that you can hold on a piece of paper, so decks of these cards would get processed at once as a single “task” or “job”. To make matters worse, only one task could be processed at a time as the cards must be read in order, and you don’t want to mix up different stacks. Not only did you have to load each program by hand, but one long job would block the entire queue.

As computer processing speed rapidly increased, it quickly became apparent to a number of people that the humans were the slowest part of this entire process. Every moment that the computer sat idle was a waste of money, and it was somebody’s job to schedule and process each task. Soon, it was discovered that you could use magnetic tape to hold the programs in memory. Before long, they started finding ways to process multiple jobs at once.

This made things even more frustrating for the programmers because even small jobs could take forever. You might wait 24 hours just to find out that your program had a bug in it. This was the most primitive form of time-sharing, but this term grew to refer to a wide umbrella of answers to this problem. Some of these solutions evolved into what was originally called multiprogramming.

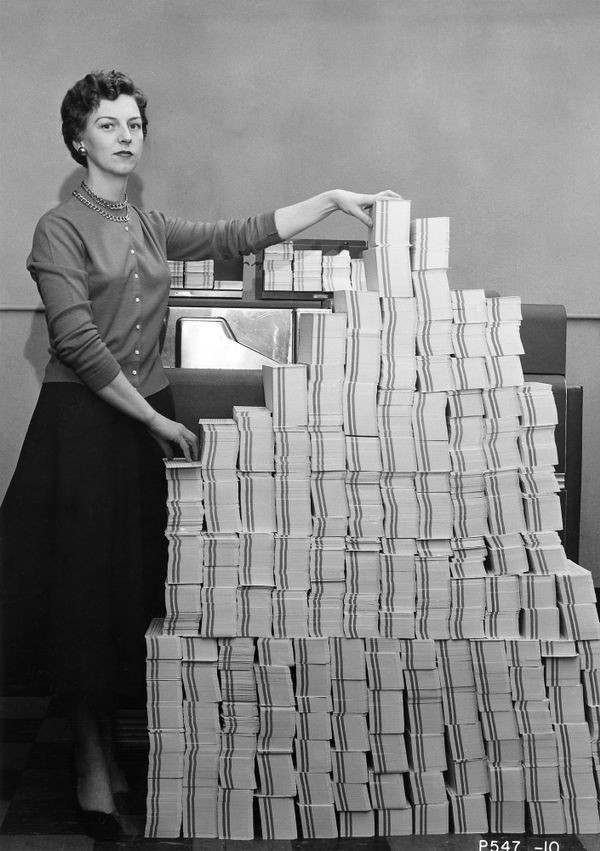

'Programmer standing beside punched cards', 1955. Each punch card can only hold about 80 bytes so this is probably around 5MB of data.

'Programmer standing beside punched cards', 1955. Each punch card can only hold about 80 bytes so this is probably around 5MB of data. Multiprogramming

In the 1950’s, computer processing and memory progressed rapidly as people discovered different ways of storing, processing, and transferring digital information. Two key advancements that went hand in hand were hardware interrupts and virtual memory. Now, computers could hold multiple programs in memory at once and even switch between them. Atlas Supervisor is often called the first operating system, as it was the first program built to coordinate multiple other programs using these tools.

The hardest part of building an operating system is managing multiple processes while keeping things responsive for the user and maintaining the integrity of the data. Interrupts allow the computer to respond to external events in the middle of a program with the use of a predetermined interrupt handler, and it’s also extremely useful for handling exceptions like dividing by zero so that the entire machine doesn’t crash. The operating system also needs to be able to store the state of each program in memory as it quickly switches between them. This is called context switching, and it’s basically impossible to do without virtual memory.

Before long, others were working on similar concepts. This method of time-sharing was developed by Christopher Strachey, and he mentioned it in passing to a gentleman named J.C.R. Licklider. Licklider soon envisioned a Man-Computer Symbiosis with human cognition aided by networks of computers.

Licklider worked at a company called BBN, and he convinced the leadership to build the BBN time-sharing system. He had worked at MIT previously, and he got help from a few of his former coworkers like John McCarthy. Licklider was an effective evangelist of networked computers, and the US government was quick to notice. In 1962, he was appointed head of the Information Processing Techniques Offices of the Advanced Research Projects Agency— soon to be known as DARPA.

Project MAC

Licklider was convinced that computer networking was the future, so he started distributing grants around the country for things like the innovative Project Genie time-sharing system at UC Berkeley. John McCarthy had been advocating for time-sharing at MIT, and they had implemented the Compatible Time-Sharing System in 1961. After Licklider organized a $2 million dollar grant from ARPA, the group at MIT were able to make a few more innovations in what came to be known as Project MAC.

The most influential thing to come out of Project MAC was undoubtedly something called Multics. This innovative operating system was designed to combine open access with security, and it explored many new ways of sharing computer processing and memory. It gave each user their own their own address space inside of its virtual memory as well as access to a few common procedures. Beyond that, Multics was filled with groundbreaking features like a shell between the user and the operating system to make it easier to use.

The main goal of the program was for each user to feel like they had their own computer. To achieve this, MIT built a system of terminals that each had a flexowriter so that multiple people could interact with the mainframe at once. The most innovative touch of all was the process scheduling program that decided which jobs to run based on priority. This central traffic controller could even allow inter-process communication. Each user had their own stack of tasks defined within its own unique process, and Multics even had a primitive version of email.

Licklider made great strides in his two years at ARPA, and others were beginning to see the potential for networked computers. By 1963, he was already imagining what he was calling an Intergalactic Computer Network. We’ll see how this progressed in a moment, but I want to take a quick aside to talk about programming languages. One of Licklider’s biggest issues while planning this network was the vast number of compilers in use at the time.

Programmers

As time-sharing systems became prominent, people were quickly discovering how challenging it could be to coordinate multiple processes on the same computer. Even though they were rapidly becoming more powerful, one intense computation could hold up the entire system. Even worse, it was hard to share memory between processes without them corrupting each other. Project MAC was rapidly innovating on the ground, but it was not immediately influential.

Before we go any further, I want to clarify a few terms. A computer program is the list of instructions given to the computer to complete a task. A process is the current execution state of a program. The execution state or program state is the value of all variables at any given point of program execution. A variable is just a value stored in memory and referenced by a memory address.

To complete a context switch, the operating system must store both the program state and the memory address of the next instruction to be executed in virtual memory. This process control block allows the operating system to rapidly switch between processes in the middle of program execution. In the beginning, every program was single-threaded with only one locus of execution as people were still learning how to control these giant, expensive computers. In fact, the word thread was actually used as a synonym for process in one of the Multics papers.

It would take some time for all of these concepts to fully coalesce because ‘programming’ was nothing more than connecting a room full of wires together at first. I’ve been using the word programmer for convenience, but that was not truly distinguished as a profession until around this point. While Ada Lovelace pioneered the field, most of the people using computers were mathematicians and scientists. They just needed to make difficult calculations, and it wasn’t their job to worry about optimizing the computing process.

Much of the reason this changed was due to the U.S. Military’s response to World War II. As they ordered more and more gigantic contracts like the SAGE missile defense system from IBM, the need for specialists started to become clear. The ENIAC was built to calculate artillery firing tables, and some of the human computers that were hired to assist with it became the first programmers.

“Compilers”

Kathleen Antonelli worked on the ENIAC, and she helped invent the subroutine which was a way to get a computer to repeat parts of the program multiple times. A subroutine is a general term for a reusable block of code, and sometimes these would be called procedures. At this point in history, you can just think of it as a function that changes global state but returns void.

I will also give credit to the programmers who worked on the EDSAC which followed soon afterwards as they pioneered concepts such as Assembly language. Some of the key people involved got together and wrote the first real programming book, The Preparation of Programs for an Electronic Digital Computer. Although Turing had written about similar concepts, they were the first ones to write down how to put these ideas into practice. D.J. Wheeler was one of these engineers, and he first defined a ‘library’ of subroutines in 1952.

The company that built the ENIAC later hired Grace Hopper. Generally, a compiler is a computer program that transforms what you write into something the computer can understand. Each assembly language is highly specific to the computer architecture for which it was built. So, compilers were designed to make programs reusable between computers.

Grace Hopper built A-0 which was called a compiler, but it really just gave programmers access to some common subroutines that made their life easier. She was already working on something called B-0 which would allow programs to be written in plain english. This would later inspire COBOL, but she was beaten to the punch by IBM.

Early Languages

The closest thing to a modern programming language came with FORTRAN in 1957 which was built by John Backus simply because IBM wanted to lower the costs associated with computing. Although a language called Plankalkül was conceptualized in the 1940’s, it was stifled by the fall of Nazi Germany. FORTRAN was proprietary to IBM and not very expressive, so a committee was formed to create a common programming language that computer scientists could share while researching new paradigms. Backus advised this committee, and they designed ALGOL in 1958. They called it IAL at first, and Backus later said this about their intentions:

The Zurich ACM-GAMM Conference had two principal motives in proposing the IAL: (a) To provide a means of communicating numerical methods and other procedures between people, and (b) To provide a means of realizing a stated process on a variety of machines…

This was the first language to fully incorporate Lambda Calculus. They knew the field was changing rapidly, so they decided to iterate on new versions while distinguishing them by the year they were designed. ALGOL 58 introduced the concept of code blocks which was further refined in ALGOL 60 with new features like lexical scope facilitated by a call stack. This allows variables to be enclosed within a function and automatically removed from memory when the function is done.

ALGOL 60 introduced the concept of a thunk which is a way to store a function in memory so that it is not immediately evaluated. A language called Simula was built on top of ALGOL 60, and Simula 67 was notably influential. Simula was intended to complete complex simulations by modelling discrete events within the system, and it introduced exciting new ideas like objects and classes.

John McCarthy from MIT advised on ALGOL 60 after he had built his own language called Lisp in 1958. Lisp pioneered recursion, first-class functions and conditional expressions. Less than fifteen years after the subroutine was first conceived, Lisp was experimenting with dynamic scope by passing functions to other functions so that variable values depend on the current state of the call stack. Lisp had a minimal working interpreter by 1960 which was joined by a full compiler with garbage collection by 1962. To clarify, garbage collection is just the removal of data that is no longer needed from memory so that those memory addresses can be reused.

While FORTRAN was widely used due to IBM’s stranglehold on the computing world at the time, ALGOL was immensely influential. This new ability to express computer programs in text was crucial. Computer programming was at the forefront of mathematics, and even luddites could now join the fray. As time-sharing systems like Project MAC and Project Genie started to be implemented across the world, the need to share this single processor in the most efficient manner grew increasingly important.

Early Concurrency

Edsger W. Dijkstra barely used computers personally, but he helped build the first ALGOL 60 compiler. Later, he worked on an incredibly innovative operating system called THE multiprogramming system. Much of our current thoughts on concurrent programming can be tracked to Dijkstra’s 1965 paper Cooperating Sequential Processes where he defined a bounded buffer as a storage area of finite size to hold data temporarily.

His concepts of semaphores, mutual exclusion, and self-stabilizing finite state machines were extremely influential. Soon, people were discovering the power of combining bounded buffers and semaphores with a First-In-First-Out (FIFO) queue system to coordinate provider-consumer relationships in an attempt to mitigate the perils of race conditions and deadlocks. Tony Hoare built on these primitives with something called a Monitor which helped solve many of the issues caused by multiple users of a single computer sharing resources.

Semaphores are a kind of signal that live in a common memory location between processes. They determine when each process can access shared resources to ensure that these only get used by one process at a time. This mutual exclusion allows synchronization, and this helps prevent data corruption. In the same way that you didn’t want to mix up people’s punch cards when doing batch processing, you also don’t want multiple processes accessing shared values from memory at the same time. So, you need to block access to this critical section until each process is done.

Dijkstra was extremely prolific, and he published articles on numerous topics including garbage collection which is especially difficult when juggling multiple processes. By 1978, Tony Hoare refined his ideas on concurrency into something called Communicating Sequential Processes which incorporated message passing. Message passing can take many forms from functional to object-oriented depending on who you ask, but it’s generally defined as a way of encapsulating behavior within the communication between processes.

The Actor Model, SmallTalk, and Scheme

Carl Hewitt was also greatly influenced by Dijkstra, but he took his own path when developing the Actor Model in 1973. Hewitt believed that Dijkstra’s model was too reliant on global state, so he proposed an alternative that was completely based around asynchronous message passing. Hewitt defined Actors as “computational entities” that can independently send and receive messages from other Actors to which they are connected, and these messages can change their behavior or even create new Actors.

Hewitt had been inspired by Simula 67 and Lisp, but he also thanked someone named Alan Kay in his first paper on the Actor model. Alan Kay had been working on a language called Smalltalk which was also extremely innovative. Kay rejected conventional thought by saying, “why [would anyone] want to divide it up into weaker things called data structures and procedures. Why not divide it up into little computers, as time sharing was starting to?” By Smalltalk-72, the core concept was that everything was an object. He adopted the phrase Object-Oriented Programming, but Kay would later regret this label.

Instead, he would often insist that “the big idea is Messaging”, and that “the mental image was one of separate computers sending requests to other computers that had to be accepted and understood by the receivers before anything could happen.” Dan Ingalls built one of the first virtual machines to bring Kay’s ideas to life. It started out as a fairly simple interpreter, but this would grow quite complicated by 1980. Smalltalk never achieved much mainstream success, but it was extremely influential.

In 1972, while Hewitt was still formulating the Actor model, Gerald Sussman and Guy Steele decided to make a small version of Actors in Lisp to better understand the concept. They were students at MIT where Hewitt was a researcher, and all three of them were involved with Project MAC. Sussman and Steele were impressed by what they built as they thought “all the patterns of control structure that Hewitt had described in terms of actors could equally well be described by the lambda calculus”.

This became Scheme which many consider to be the most influential version of Lisp, and it popularized concepts like closures and continuations. Lisp was so popular in the burgeoning AI community that they even made special machines to run it more efficiently, but it is known to be overwhelming to beginners. Scheme’s simplicity made it a great teaching language. While it will play a major role later in our story, Scheme did not see wide usage outside of academic circles. Hewitt was critical of Scheme as its “hairy control structure” did not achieve the full concurrency he desired.

Concurrent Pascal

In fact, a paper released in 1978 said that high-level language support for concurrency was largely non-existant, but they did take some time to talk about Concurrent Pascal by Per Brinch Hansen. Pascal is notable for being the first typesafe language, and it’s yet another variant of ALGOL 60. Like many others, Per Brinch Hansen had been greatly influenced by Dijkstra. Brinch Hansen, Hoare, and Dijkstra were all working together at the same time, and they are generally considered to be the fathers of concurrent programming.

In 1969, Brinch Hansen built an operating system for something called the RC4000 multiprogramming system. Instead of only serving a single purpose, RC4000 was the first small operating system that was designed to be flexible. Its greatest innovation was ensuring a special protected area of memory called the kernel which formalized some of the concepts seen in Multics. Most operating systems today build on this concept.

Brinch Hansen later built Concurrent Pascal which uses multiple processes coordinated by a monitor using a disk buffer. One issue was that the programmer always had to remember to imperatively call continue as the initial statement was usually intended to execute forever. Still, synchronization of processes within a single computer had never been so easy. In fact, there was no memory protection whatsoever.

Later, he refined this idea into the concept of distributed processes that could be used in real-time programs running across networks of microcomputers. Key to this concept was the ability for “processes to call common procedures defined within other processes” while maintaining no common variables. This is vaguely similar to a Remote Procedure Call which was being conceived elsewhere at the same time. Concurrent Pascal was radically innovative, and Brinch Hansen’s work was influential. I suppose I should explain what I mean by microcomputers.

Microcomputers

Computing was still advancing at a remarkable pace. IBM remained the dominant force, and their ubiquity allowed them to set the standard for the industry as a whole. Each mainframe was still completely unique which made upgrading these expensive machines painful.

So, IBM announced an operating system called System/360 in 1964 that was designed to be compatible with a wide variety of hardware, and it brought a lot of innovation into the mainstream. By 1972, System/360 offered a way for the user to manually split up work within a program. They called these ‘subtasks’, and they used the word time slicing to explain how they rapidly switched between them.

Integrated circuits became generally available around this time, and a young company called Intel released the first microprocessor in 1971. Minicomputers quickly became popular, but don’t get too confused. These things were still usually around the size of a refrigerator at first. Still, computing was becoming more generally available than ever before as the necessary parts continued to grow smaller and cheaper. Soon, a variety of competitors emerged to tap into this new market.

The word minicomputer was mostly an advertising term, so it was no surprise when people started marketing things as microcomputers shorty after that. It was a natural extension from the word microprocesser. By 1973, the first personal computer was released, and it was quickly followed by the Apple I in 1976. It was clear that a sea change had been completed when IBM released the Personal Computer in 1981, and a different type of operating system was needed to take advantage of these new capabilities.

IBM, CP/M, and Microsoft

A man named Gary Kildall began experimenting with Intel’s new microprocessors, and he soon wrote the first operating system for them called CP/M— Control Program for Microcomputers. By today’s standards, it’s just a simple single-user shell, but he pioneered the creation of something called a BIOS. This stands for Basic Input/Output System, and it’s a way to abstract away the hardware so that the operating system could be easily ported to different machines. Combined with its relatively cheap price, CP/M quickly became the standard operating system for microcomputers.

Kildall also wrote a language for microprocessors called PL/M, but versions of BASIC were much more popular. As you might guess from the name, BASIC is very simple as it was originally designed to be a teaching language, but it was quickly adopted by hobbyists like a couple young men named Bill Gates and Paul Allen. Gates and Allen built some BASIC interpreters for various microcomputer companies like Apple and Altair as the first product of their new company, Microsoft— a combination of the words microcomputer and software.

Microsoft’s only other major project until 1981 was a card that helped run CP/M on Apple computers, but they established themselves as the programming language authority in the personal computing world. So, when IBM decided to wade into the PC market, they turned to Microsoft for not just BASIC, but also FORTRAN and COBOL as well. Beyond the languages, IBM needed an operating system. CP/M was the obvious answer, so Gates suggested that IBM should talk to Kildall.

For whatever reason, Kildall’s company rejected working with IBM, so Microsoft gladly stepped in. Microsoft bought a competitor with a compatible interface, and this soon became MS-DOS. Although Kildall and Gates were friends, Gates was extremely competitive. Suddenly, all the software that had been written for CP/M could be used in the new IBM PC, and Microsoft was off to the races as MS-DOS became their flagship product. Before that point, the only operating system they had ever built was actually a version of Unix.

Unix

The Multics operating system was developed at Project MAC as a joint venture with General Electric and Bell Labs. The folks at Bell Labs dropped out of the project in 1969 as they felt like it was moving too slowly. Four of their best engineers had been inspired by Multics, and they wanted to implement some of its ideas on a smaller scale. They later said they wanted to make computing as simple as possible while building “a system around which a community could form”. Using a PDP-7 minicomputer, Ken Thompson began the work that same year.

Dennis Ritchie started helping out, and he began designing the language that would become known as C. C is technically a distant relative of ALGOL-60, but it went through many iterations in between which made it virtually unrecognizable. C found a utilitarian middleground between expressiveness and power, and it gave developers powerful tools like dynamic memory allocation while still being relatively easy to use. Before long, they re-wrote Unix using C. This proved how Ritchie and Thompson were guided by the philosophy of creating tools that would make it easy to build larger tools.

They wanted Unix to be interactive, so they borrowed some ideas from Multics like a shell that starts a new process for each command and a file system that is organized into nested directories. However, unlike the supercomputers at MIT, Unix was intended to run on minicomputers with limited power. While it was much more minimal by design, they gave programmers the power to fix most issues.

They admitted there was “no general inter-process communication or synchronization scheme”, but they did introduce something called a signal. A signal is a system call, and it’s a bit like the software equivalent of a hardware interrupt. The most important of these was fork which allows the developer to create a child process inside a program. The Unix team knew that people could use these primitives to implement things like semaphores from scratch, but they suggested just using a file in that paper.

Other people from Bell Labs started helping out as well. By 1975, pcc was developed which was a Portable Compiler designed to make it easy to implement C on any machine. After this point, Unix grew in popularity as it became well-known for being compact and adaptable. Like all other software at the time, this was completely proprietary to Bell Labs until it spread all the way across the country to Berkeley in 1974.

Pipes and Sockets

Bell had a monopoly on communications. This gave them a huge budget for Bell Labs, but it also landed them in legal trouble numerous times. By the time Unix was being created, Bell was forbidden to compete commercially. Because of this, the license to use Unix was remarkably cheap compared to something like System/360. Soon, the source code was generally available, and a multitude of variants were created thanks to Unix’s unique mixture of “reasonable efficiency and expressive power”.

Unix quickly became popular in both academic and corporate settings, and it was the preferred operating system of computing enthusiasts. It was used in a wide variety of devices by the 1980s, but it was particularly suited for networking. Unix had a simple form of email from the very beginning, and more features were added over time that made it easier to communicate between processes.

A member of the team named Doug McIlroy was inspired by the “data streams” that were made possible by another recent innovation— coroutines. A coroutine is basically just a function that can be temporarily suspended with its state intact which allows multiple parts of a program to work together. Pipes were seen as a way of connecting programs together like connecting pieces of a garden hose to gradually output data.

In 1979, UUCP was created so that Unix machines could communicate through phone lines. By the very next year, people were talking to each other from across the country on Usenet which was originally called the “Unix Users Network”. This was only the beginning. Eventually, the Berkeley version of Unix became the first operating system to incorporate TCP/IP and sockets after Bill Joy implemented an early version of the specification. Unix was so popular that it was used and loved by many of the contractors who built the ARPANET, but I’m getting ahead of myself—let’s backtrack just a bit.